For the last four months, I've been working on my master's thesis—Suggestive Drawing Among Human and Artificial Intelligences—at the Harvard Graduate School of Design. You can read a brief summary below.

The publication aims to explain what Suggestive Drawing is all about in language that, hopefully, artists, designers, and other professionals with no coding skills can understand.

You can read the interactive web publication or download it as a PDF.

For the tech-savvy, and for those who would like to dive in and learn more about how the working prototype of the project was developed, I'm preparing a supplemental Technical Report that will be available online.

We use sketching to represent the world. Design software has made tedious drawing tasks trivial, but we can't yet consider machines to be participants in how we interpret the world, because they cannot perceive it. In the last few years, artificial intelligence has experienced a boom, and machine learning is becoming ubiquitous. This presents an opportunity to incorporate machines as participants in the creative process.

To explore this, I created an application, a suggestive drawing environment, where humans can work in synergy with bots¹ that have a certain character, with non-deterministic and semi-autonomous behaviors. The project explores the user experience of drawing with machines, escapes the point-and-click paradigm with a continuous flow of interaction, and enables a new branch of creative mediation with bots that can develop their own aesthetics. The result is a new form of collective creativity in which human and non-human participation produces synergetic pieces that express each participant's character.

In this realm, the curation of image data sets for training an artificially intelligent bot becomes part of the design process. Artists and designers can fine-tune the behavior of an algorithm by feeding it images, programming the machine by example without writing a single line of code.

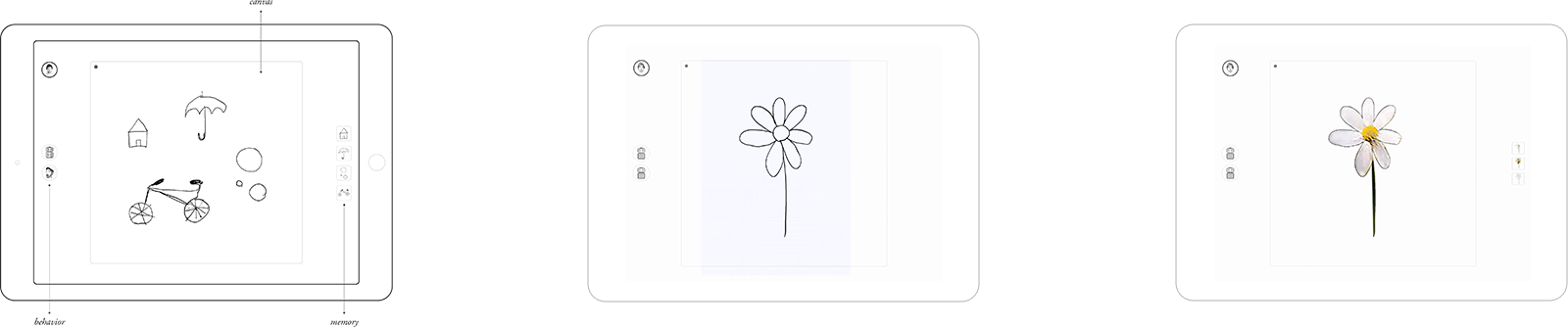

The application incorporates behavior (humans and bots) but no toolbars, and memory (it stores and provides context for what has been drawn) but no explicit layer structure. Actions are grouped by spatial and temporal proximity, and the grouping adjusts dynamically so as not to interrupt the flow of interaction. The system allows users to access it from different devices, and also lets bots see what we are drawing so they can participate in the process. In contrast to interfaces of clicks and commands, the application offers a continuous flow of interaction, with bots that have behavior in place of toolbars. What you can see in the following diagram is a simple drawing suggestion: I draw a flower and a bot suggests a texture to fill it in. In this interface, you can select multiple human or artificial intelligences with different capabilities and delegate tasks to them.
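For the tech-savvy, here is a minimal sketch of what grouping actions by spatial and temporal proximity could look like. It is not the prototype's actual code: the `Stroke` class, the `group_strokes` function, and both thresholds are hypothetical names and values chosen for illustration, and the thresholds are fixed here, whereas the application adjusts its grouping dynamically.

```python
from dataclasses import dataclass
from math import hypot


@dataclass
class Stroke:
    """Hypothetical record of one drawn stroke (not the prototype's data model)."""
    x: float  # centroid x, in pixels
    y: float  # centroid y, in pixels
    t: float  # time the stroke was finished, in seconds


def group_strokes(strokes, max_gap_s=2.0, max_dist=100.0):
    """Group strokes that are close in both time and space.

    Assumption: fixed thresholds. A stroke joins the current group only if it
    follows the previous stroke within max_gap_s seconds and lies within
    max_dist pixels of it; otherwise it starts a new group.
    """
    groups = []
    for stroke in sorted(strokes, key=lambda s: s.t):
        if groups:
            last = groups[-1][-1]
            close_in_time = stroke.t - last.t <= max_gap_s
            close_in_space = hypot(stroke.x - last.x, stroke.y - last.y) <= max_dist
            if close_in_time and close_in_space:
                groups[-1].append(stroke)
                continue
        groups.append([stroke])
    return groups


strokes = [
    Stroke(0, 0, 0.0),
    Stroke(10, 5, 1.0),      # near the first stroke in time and space
    Stroke(400, 400, 1.5),   # soon after, but far away on the canvas
    Stroke(405, 410, 10.0),  # nearby, but drawn much later
]
print([len(group) for group in group_strokes(strokes)])  # prints [2, 1, 1]
```

Comparing each stroke only with the previous one keeps the sketch short. A dynamic version could, for example, widen or narrow the two thresholds depending on how fast someone is drawing.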

I developed three drawing bots—texturer, sketcher, and continuator—that suggest texture, hand-sketched detail, or ways to continue your drawings, respectively. Classifier recognizes what you are drawing, colorizer adds color, and rationalizer rationalizes shapes and geometry. Learner sorts drawings in order to use existing drawings for training new bots according to a desired drawing character, allowing the artist to transfer a particular aesthetic to a given bot. In training a bot, one of the biggest challenges is the need to either find or generate an image data set from which bots can learn.

This project presents a way for artists and designers to use complex artificial intelligence models and interact with them in familiar mediums. The development of new models, and the exploration of their potential uses, is a road that lies ahead. I believe that, as designers and artists, it is our responsibility to envision and explore the interactions that will make machine intelligence a useful companion in our creative processes.

Thanks so much for reading.

¹ According to the English Oxford Dictionary, a bot is an autonomous program on a network (especially the Internet) which can interact with systems or users.